Anyone can automate end-to-end tests!

Our AI Test Agent enables anyone who can read and write English to become an automation engineer in less than an hour.

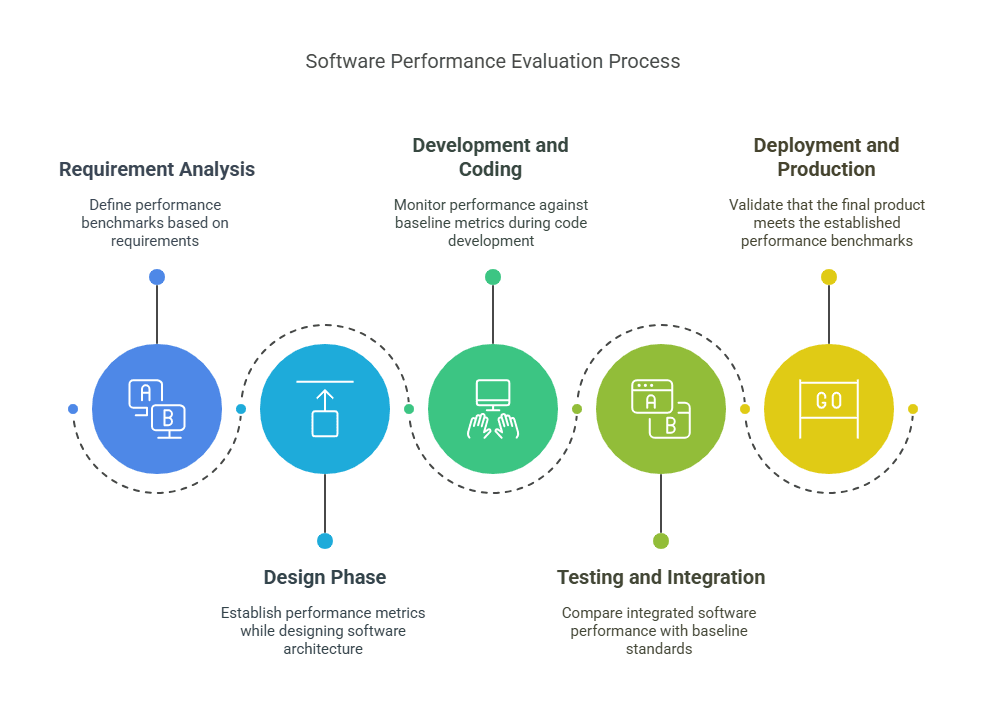

Maintaining consistent performance and reliability in software is crucial, especially when updates and changes are frequent. Ensuring that new features or fixes don’t disrupt the application’s stability requires a clear performance benchmark—a snapshot of your software’s current state to measure the impact of future changes.

By systematically capturing key metrics, baseline testing enables teams to detect performance issues early, track improvements, and keep the system stable as it evolves. In this guide, we’ll explore the essential steps of baseline testing and explain why it’s a vital practice for delivering reliable, high-quality software.

Baseline testing sets a clear reference point for evaluating system performance over time.

It helps detect inconsistencies and track how changes or updates affect the system’s stability and efficiency.

Identifying potential performance issues early reduces the risks of major failures or slowdowns after deployment.

Monitoring baseline metrics shows how much CPU, memory, and other resources the application actually uses, which helps teams allocate system resources effectively.

It facilitates trend analysis, allowing teams to manage and optimize software performance proactively.

Regular comparisons against baselines help maintain stability.

Baselines act as reference points to assess the impact of code changes.

Stable and high-performing applications lead to a better user experience.

Tracking performance against a baseline helps teams verify that they meet the targets defined in Service Level Agreements (SLAs).

Begin by identifying the key performance indicators (KPIs) that reflect the application’s health and efficiency. These indicators can include:

Response time: how quickly the system responds to user inputs.

Throughput: the volume of transactions processed in a given timeframe.

Memory usage: how much memory the application consumes under normal conditions.

Error rate: the frequency and types of errors occurring during typical use.

These KPIs will serve as the criteria against which the system’s performance is measured.
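To make these KPIs concrete, it can help to record each one with a unit and a direction, since response time, memory usage, and error rate should go down while throughput should go up. The Python sketch below assumes that simple model; the `Kpi` class, the metric names, and the units are illustrative choices, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    """Hypothetical KPI definition used as a baseline criterion."""

    name: str
    unit: str
    lower_is_better: bool  # True for latency, memory, and errors; False for throughput


# Illustrative KPI set mirroring the four indicators described above.
KPIS = [
    Kpi("response_time", "ms", lower_is_better=True),
    Kpi("throughput", "transactions/s", lower_is_better=False),
    Kpi("memory_usage", "MB", lower_is_better=True),
    Kpi("error_rate", "% of requests", lower_is_better=True),
]


def is_improvement(kpi: Kpi, new: float, old: float) -> bool:
    """Return True if `new` is strictly better than `old` for this KPI."""
    return new < old if kpi.lower_is_better else new > old


if __name__ == "__main__":
    for kpi in KPIS:
        direction = "lower" if kpi.lower_is_better else "higher"
        print(f"{kpi.name} ({kpi.unit}): {direction} is better")
```

Recording the direction up front means later comparisons do not need special cases for metrics like throughput, where a bigger number is the good outcome.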

Simulate real-world conditions and execute the initial tests to gather the baseline metrics. This includes:

Running the software with representative workloads or user interactions.

Capturing data using performance monitoring tools.

Configuring the test environment to match production as closely as possible so that the results reflect realistic conditions.
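As a minimal sketch of capturing baseline numbers, the Python function below runs a workload repeatedly and records the median and 95th-percentile duration, peak memory, and error rate using only the standard library. It assumes the workload can be called as a plain function; `measure` and the stand-in workload are hypothetical. Note that `tracemalloc` tracks only Python-level allocations and adds some overhead to the timings, so a real setup would more likely rely on dedicated performance monitoring tools running against a production-like environment.

```python
import statistics
import time
import tracemalloc
from typing import Callable


def measure(workload: Callable[[], object], runs: int = 20) -> dict[str, float]:
    """Run `workload` `runs` times and return baseline metrics (hypothetical helper)."""
    if runs < 2:
        raise ValueError("need at least two runs to compute percentiles")
    durations_ms: list[float] = []
    errors = 0
    tracemalloc.start()  # trace Python memory allocations during the runs
    try:
        for _ in range(runs):
            start = time.perf_counter()
            try:
                workload()
            except Exception:
                errors += 1  # failed runs still count toward timing and error rate
            durations_ms.append((time.perf_counter() - start) * 1000)
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return {
        "median_ms": statistics.median(durations_ms),
        # With n=20 there are 19 cut points; the last one is the 95th percentile.
        "p95_ms": statistics.quantiles(durations_ms, n=20, method="inclusive")[-1],
        "peak_memory_kb": peak_bytes / 1024,
        "error_rate": errors / runs,
    }


if __name__ == "__main__":
    # Stand-in for a representative workload, e.g. rendering a page or handling a request.
    print(measure(lambda: sorted(range(100_000), reverse=True)))
```

Reporting the median and a high percentile rather than a single run keeps one noisy measurement from becoming the baseline.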

Carefully document all the baseline metrics and the conditions under which they were collected. This includes:

Noting the test environment specifications (hardware, software versions, configurations, etc.).

Recording the results of the initial tests, detailing each KPI measurement.

Storing the test cases and scripts used during baseline testing for future reference.
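A lightweight way to keep metrics and their context together is to store both in one versioned file. The Python sketch below serializes a baseline to JSON alongside a few environment details from the standard `platform` module; the record layout and the function names are assumptions made for illustration, and a real record would also capture the hardware, configuration, and application versions described above.

```python
import json
import os
import platform
from datetime import datetime, timezone
from pathlib import Path
from typing import Any


def environment_snapshot() -> dict[str, Any]:
    """Collect basic details about the machine the baseline was captured on."""
    return {
        "os": platform.platform(),
        "machine": platform.machine(),
        "python": platform.python_version(),
        "cpu_count": os.cpu_count(),
    }


def baseline_to_json(metrics: dict[str, float], test_config: dict[str, Any]) -> str:
    """Bundle metrics, test conditions, and environment into one JSON document."""
    record = {
        "captured_at": datetime.now(timezone.utc).isoformat(),
        "environment": environment_snapshot(),
        "test_config": test_config,  # e.g. scenario name, user count, test script path
        "metrics": metrics,
    }
    return json.dumps(record, indent=2, sort_keys=True)


def save_baseline(path: Path, metrics: dict[str, float], test_config: dict[str, Any]) -> None:
    """Write the baseline record to disk so it can be versioned with the test scripts."""
    path.write_text(baseline_to_json(metrics, test_config), encoding="utf-8")


def load_baseline(path: Path) -> dict[str, Any]:
    """Read a previously saved baseline record."""
    return json.loads(path.read_text(encoding="utf-8"))
```

For example, `save_baseline(Path("baseline.json"), {"homepage_load_s": 3.0}, {"scenario": "homepage"})` would write a file that later test runs can load and compare against.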

After changes are made (such as code updates, feature additions, or bug fixes), re-run the tests:

Use the same test scenarios and conditions as established during the baseline phase.

Compare the new test results against the recorded baseline metrics.

Identify deviations, such as longer response times, higher memory usage, or higher error rates.
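The comparison step can be automated by flagging any metric that moved in the wrong direction by more than a tolerance. The Python sketch below assumes metrics are stored as name-to-number dictionaries and that a 5% tolerance is acceptable; both the threshold and the `find_regressions` helper are illustrative, and in practice tolerances are tuned to the run-to-run noise a team actually observes.

```python
import math
from dataclasses import dataclass
from typing import Collection


@dataclass
class Deviation:
    metric: str
    baseline: float
    current: float
    change_pct: float  # positive means the value went up


def percent_change(baseline: float, current: float) -> float:
    """Relative change from baseline in percent; infinite if the baseline is zero."""
    if baseline == 0:
        return 0.0 if current == 0 else math.copysign(math.inf, current)
    return (current - baseline) / abs(baseline) * 100


def find_regressions(
    baseline: dict[str, float],
    current: dict[str, float],
    higher_is_better: Collection[str] = (),
    tolerance_pct: float = 5.0,
) -> list[Deviation]:
    """Return metrics that got worse than the baseline by more than tolerance_pct."""
    regressions = []
    for metric, base in baseline.items():
        if metric not in current:
            continue  # a missing metric is worth investigating separately
        change = percent_change(base, current[metric])
        # For metrics like throughput a drop is bad, so flip the sign before checking.
        worsening = -change if metric in higher_is_better else change
        if worsening > tolerance_pct:
            regressions.append(Deviation(metric, base, current[metric], round(change, 2)))
    return regressions


if __name__ == "__main__":
    baseline = {"response_time_ms": 3000, "throughput_tps": 120, "error_rate_pct": 0.5}
    current = {"response_time_ms": 3300, "throughput_tps": 118, "error_rate_pct": 0.5}
    for dev in find_regressions(baseline, current, higher_is_better={"throughput_tps"}):
        print(dev)
```

With these sample numbers only the response time is flagged (a 10% increase); the small throughput drop stays within the tolerance.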

If deviations or regressions are detected:

Analyze the differences between the current and baseline metrics to pinpoint potential issues.

Use performance monitoring tools to track down specific bottlenecks or areas of concern.

Implement optimizations to improve performance or fix the identified issues.

Re-test and validate the changes against the baseline metrics to confirm improvements.
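To illustrate the analyze, optimize, and re-test loop, the Python sketch below uses the standard library's `cProfile` and `pstats` modules as the monitoring tool. The slow list-membership check is a made-up bottleneck chosen only because its fix (switching to a set) is easy to see; in a real application the profiler output points at wherever the regression actually lives.

```python
import cProfile
import io
import pstats
import timeit


def slow_lookup(items: list[int], targets: list[int]) -> int:
    """Hypothetical bottleneck: each `in` check scans the whole list (O(n))."""
    return sum(1 for t in targets if t in items)


def fast_lookup(items: list[int], targets: list[int]) -> int:
    """Optimized version: a set gives O(1) average-time membership checks."""
    lookup = set(items)
    return sum(1 for t in targets if t in lookup)


def profile_call(func, *args) -> str:
    """Run func under cProfile and return the top entries by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    func(*args)
    profiler.disable()
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(5)
    return out.getvalue()


if __name__ == "__main__":
    items = list(range(5_000))
    targets = list(range(0, 10_000, 7))
    print(profile_call(slow_lookup, items, targets))  # shows where the time goes
    # Re-test: the optimized version must return the same answer before timings matter.
    assert slow_lookup(items, targets) == fast_lookup(items, targets)
    for fn in (slow_lookup, fast_lookup):
        seconds = timeit.timeit(lambda: fn(items, targets), number=5)
        print(f"{fn.__name__}: {seconds:.3f}s for 5 runs")
```

Checking that the optimized code still produces the same result before comparing timings keeps a "faster" change from silently breaking functionality.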

Following these steps helps baseline testing maintain system consistency and quality, and helps teams proactively manage and optimize the software’s performance over time.

Consider a web application where baseline testing is performed to measure the page load time:

During initial testing, the homepage load time is measured at 3 seconds.

After implementing new features or updates, the homepage load time is re-tested and found to be 2.8 seconds.

The comparison shows an improvement of 0.2 seconds in load time, indicating that the updates positively impacted performance.

This example illustrates how baseline testing establishes a reference point (3 seconds load time) and measures changes after updates to ensure continuous improvement.
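Expressing the change relative to the baseline makes results comparable across pages with different load times. For the homepage example, the absolute and percentage changes work out as follows (a negative value means the page got faster):

$$
\Delta t = t_{\text{new}} - t_{\text{baseline}} = 2.8\,\text{s} - 3.0\,\text{s} = -0.2\,\text{s},
\qquad
\frac{\Delta t}{t_{\text{baseline}}} \times 100\% = \frac{-0.2}{3.0} \times 100\% \approx -6.7\%
$$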

A baseline test type is the specific kind of baseline testing conducted to achieve a particular objective. Common baseline test types include:

Performance baseline testing: captures performance metrics such as response time, throughput, resource utilization, and error rates.

Functional baseline testing: establishes the standard functionality of an application so that future functional changes can be compared against it.

Configuration baseline testing: records the initial configuration settings of a system to track changes and ensure consistency.

Security baseline testing: establishes security standards and policies so that adherence can be measured and monitored over time.

Each type serves as a benchmark to maintain quality and stability in different areas of the software system.
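For a configuration baseline, the comparison often comes down to diffing the recorded settings against the current ones. The Python sketch below assumes configuration can be loaded as a flat dictionary of key-value pairs; the `config_drift` helper and the setting names in the example are invented for illustration.

```python
from typing import Any


def config_drift(baseline: dict[str, Any], current: dict[str, Any]) -> dict[str, Any]:
    """Report settings added, removed, or changed relative to a configuration baseline."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "changed": {
            key: (baseline[key], current[key])
            for key in sorted(baseline.keys() & current.keys())
            if baseline[key] != current[key]
        },
    }


if __name__ == "__main__":
    recorded = {"max_connections": 100, "tls_min_version": "1.2", "cache_ttl_s": 300}
    live = {"max_connections": 150, "tls_min_version": "1.2", "debug": True}
    print(config_drift(recorded, live))
    # {'added': ['debug'], 'removed': ['cache_ttl_s'], 'changed': {'max_connections': (100, 150)}}
```

Nested configurations would need to be flattened first (for example into dotted keys) before a flat diff like this applies.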

Baseline testing is an essential part of maintaining software quality. It establishes a performance benchmark, identifies issues early, and enables continuous optimization, ensuring that applications remain reliable and efficient. By implementing baseline testing, organizations can deliver high-quality software and enhance user satisfaction.

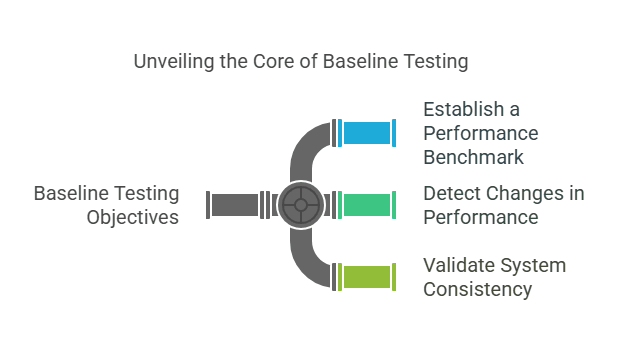

The objective of baseline testing is to establish a performance benchmark for a software application. It provides a reference point to measure and compare the system's behavior over time, helping to detect deviations, improvements, or issues.

Baseline testing involves recording the initial performance metrics of a system to serve as a comparison for future tests. For example, if a web application’s homepage initially loads in 3 seconds, this is recorded as the baseline. After updates or changes, subsequent tests check whether the homepage still loads within the baseline time or faster, indicating consistent or improved performance.

Baseline testing is crucial to ensure software consistency and reliability. It helps track performance changes, detect potential issues early, maintain system stability, optimize resource usage, and adhere to performance standards over time.

Written by

VIVEK NAIR

Vivek has 16+ years of experience in Enterprise SaaS, RPA, and Software Testing, with over 4 years specializing in low-code testing. He has successfully incubated partner businesses and built global GTM strategies for startups. With a strong background in software testing, including automation, performance, and low-code/no-code testing solutions, he ensures high-quality product delivery and innovation in the testing space.
